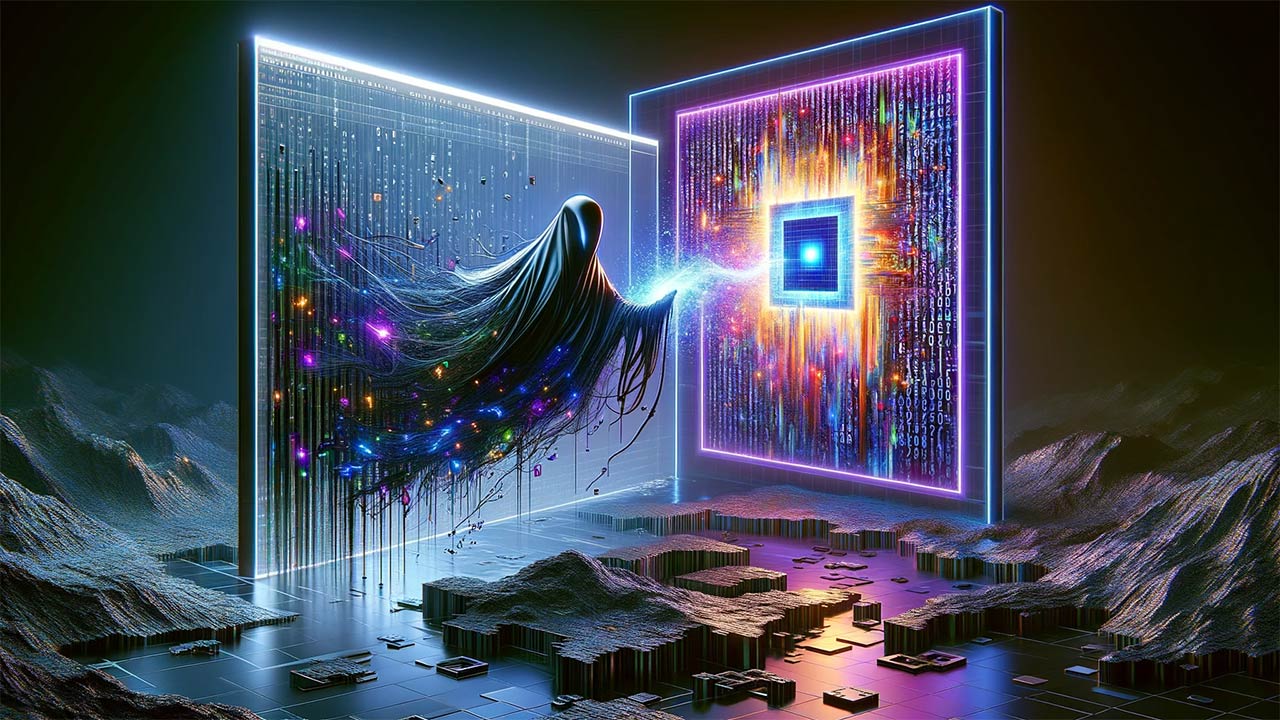

Image created using DALL·E 3 with this prompt: The AI poisoning tool Nightshade, which protects digital artwork from being used to train generative AI models without permission, racked up more than 250,000 downloads in just five days. The software operates by tagging images at the pixel level using the open-source machine learning framework Pytorch. These tags are not obvious to human viewers, but they cause AI models to misinterpret the content of the images, which can lead to the generation of incorrect or nonsensical images when these models are prompted to create new content – hence the concept of a “poisoned” image. Create an image that encapsulates the idea of Nightshade — undetectable code that “poisons” AI-generated images. Aspect ratio: 16×9.

The AI poisoning tool Nightshade, which protects digital artwork from being used to train generative AI models without permission, racked up more than 250,000 downloads in just five days.

The software works by subtly altering images at the pixel level, using the open-source machine learning framework PyTorch. These changes are barely perceptible to human viewers, but AI models trained on the altered images learn to misinterpret their content. A model that ingests enough of them can then produce incorrect or nonsensical images when prompted to create new content; hence the concept of a "poisoned" image.

The tool was developed as part of the Glaze Project, led by Professor Ben Zhao at the University of Chicago, in response to concerns from artists and creators about their work being used by AI without consent or compensation. Nightshade is available for free, in line with the project's goal of raising the cost of training on unlicensed data and making licensing images from creators a more attractive option for AI companies.

The project has been funded through university support, traditional foundations, and government grants, and the team has no intention of commercializing its intellectual property. Artists, who are often burdened with subscription fees for creative software, have particularly welcomed this approach; free is very "pro artist"!

The enthusiastic response to Nightshade underscores how strongly many human artists feel about having their art used without permission, but this is a complex issue. Ask an artist how they feel about an art class studying their work, and in most cases you'll get a different response. That said, Nightshade gives artists a way to protect their work from unpaid AI training until intellectual property regulations catch up… which won't be any time soon.

Author's note: This is not a sponsored post. I am the author of this article and it expresses my own opinions. Neither I nor my company is receiving compensation for it. This work was created with the assistance of various generative AI models.